Chapter 5: PyTorch Optimization Modules

Chapter 5 Introduction

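This chapter introduces the three core concepts of model optimization: loss functions, optimizers, and learning-rate scheduling.

There are a great many loss functions, optimizers, and learning-rate schedulers: the official PyTorch implementation (V1.10) alone ships twenty-one loss functions, thirteen optimizers, and fourteen learning-rate schedulers.

This chapter does not cover every one of them in detail. Instead, it takes a few core methods as case studies and dissects how each module works: how a loss function is written as a Module, how PyTorch organizes its loss.py hierarchy, how an optimizer updates the model's parameters, the commonly used optimizer functions, and how learning-rate scheduling works.

Once you understand these mechanisms, you can generalize from them and pick up the more complex methods on your own.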

5.1 Twenty-One Loss Functions

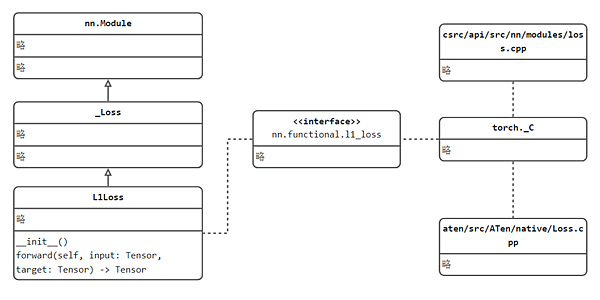

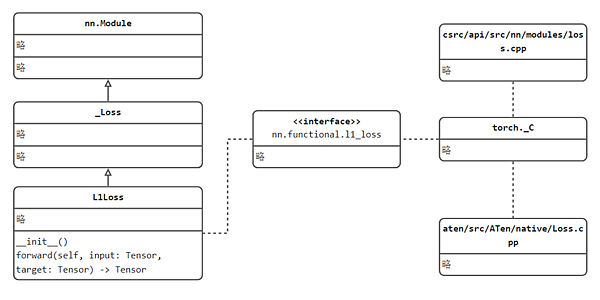

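This section focuses on how PyTorch implements its loss functions and the logic behind that implementation, not on the formula of any particular loss. The core idea is shown in the figure below:

Loss Function

A loss function measures the difference between the model's output and the ground-truth label. The closer the output is to the label, the better the model is considered to be, and vice versa. This gives a nearly equivalent statement: the smaller the loss, the better the model. Numerical optimization can then be used to drive the loss down step by step, and that process is model training.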
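The statement "training is numerical optimization that makes the loss smaller" can be sketched in a few lines. The toy data, the single weight `w`, the step size 0.5, and the 50 iterations below are all assumptions chosen for illustration; optimizers proper are covered later in the chapter.

```python
import torch

# Toy regression problem: the labels come from the "true" weight 2.0.
x = torch.linspace(-1, 1, 20)
y = 2.0 * x

w = torch.zeros(1, requires_grad=True)   # the only model parameter
losses = []

for _ in range(50):
    loss = ((w * x - y) ** 2).mean()     # MSE between model output and label
    losses.append(loss.item())
    loss.backward()                      # d(loss)/d(w)
    with torch.no_grad():
        w -= 0.5 * w.grad                # plain gradient-descent step
    w.grad.zero_()

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.2e}, w: {w.item():.4f}")
```

As the loss shrinks, `w` moves toward the value that generated the labels, which is the sense in which "smaller loss" stands in for "better model".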

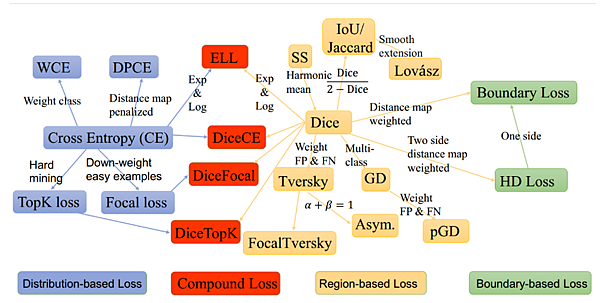

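Different tasks call for different loss functions. Regression tasks commonly use MSE (Mean Square Error) and classification tasks commonly use CE (Cross Entropy); the choice is determined by the nature of the labels. Individual tasks also refine the basic losses in many ways: Focal Loss is designed around hard examples, GIoU adds a measure of the intersection scale, DIoU adds measures of the overlap area and the distance between center points, and so on.

PyTorch provides twenty-one loss functions, listed below: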

```
nn.L1Loss
nn.MSELoss
nn.CrossEntropyLoss
nn.CTCLoss
nn.NLLLoss
nn.PoissonNLLLoss
nn.GaussianNLLLoss
nn.KLDivLoss
nn.BCELoss
nn.BCEWithLogitsLoss
nn.MarginRankingLoss
nn.HingeEmbeddingLoss
nn.MultiLabelMarginLoss
nn.HuberLoss
nn.SmoothL1Loss
nn.SoftMarginLoss
nn.MultiLabelSoftMarginLoss
nn.CosineEmbeddingLoss
nn.MultiMarginLoss
nn.TripletMarginLoss
nn.TripletMarginWithDistanceLoss
```

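This section dissects only nn.L1Loss and nn.CrossEntropyLoss, along with the functions derived from them; the remaining loss functions follow by analogy. What matters is the implementation flow: the loss functions differ only in their formulas, and each formula can be found in the official documentation.

Starting from the simplest one, L1Loss, let us see how PyTorch implements a loss function.

1. L1Loss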

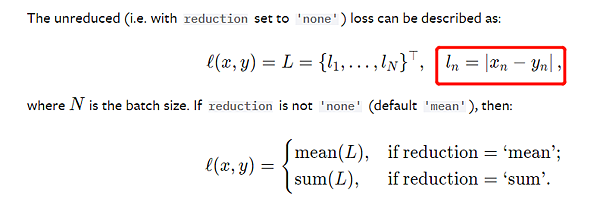

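CLASS torch.nn.L1Loss(size_average=None, reduce=None, reduction='mean')

Function: computes the absolute value of the difference between output and target. It returns either a tensor of the same shape (reduction='none') or a scalar (reduction='mean' or 'sum').

Formula: for each element $n$, $l_n = |x_n - y_n|$, where $x$ is the output and $y$ is the target. With reduction='mean' the result is $\frac{1}{N}\sum_{n=1}^{N} l_n$, and with reduction='sum' it is $\sum_{n=1}^{N} l_n$.

Parameters:

size_average (bool, optional) – Deprecated. Its role has been taken over by reduction; it is kept only so that code written for older versions still runs.

reduce (bool, optional) – Deprecated. Its role has been taken over by reduction; it is kept only so that code written for older versions still runs.

reduction (string, optional) – Whether to "reduce" the loss: take the mean of the loss values (mean), sum them (sum), or keep the original shape (none). Almost every loss function in PyTorch uses this parameter, so it is worth understanding well.

Example: code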
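The three `reduction` modes can be compared side by side with a small example. The tensor values below are made up for illustration; `loss_func` is simply the name given here to the `nn.L1Loss` instance.

```python
import torch
import torch.nn as nn

output = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
target = torch.tensor([[0.0, 2.0], [5.0, 1.0]])

# reduction="none": element-wise |output - target|, same shape as the input
loss_none = nn.L1Loss(reduction="none")(output, target)

# reduction="sum" and reduction="mean": a single scalar
loss_sum = nn.L1Loss(reduction="sum")(output, target)

loss_func = nn.L1Loss(reduction="mean")   # the default
loss_mean = loss_func(output, target)

print(loss_none.tolist())   # [[1.0, 0.0], [2.0, 3.0]]
print(loss_sum.item())      # 6.0
print(loss_mean.item())     # 1.5
```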

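Walking Through the Flow

The example code shows that loss_func is an instance of a class and is used as loss_func(output, target).

So what kind of class is nn.L1Loss, and what interface does it provide to make loss_func(output, target) work?

Jump to the definition of nn.L1Loss and you will see that it inherits from _Loss; look at _Loss and you will see that it inherits from nn.Module. Since it is an nn.Module, it only needs to implement a forward() function, and nn.Module's call mechanism then lets an instance be called like a function. Here is the implementation of the L1Loss class: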

```python
class L1Loss(_Loss):
    __constants__ = ['reduction']

    def __init__(self, size_average=None, reduce=None, reduction: str = 'mean') -> None:
        super(L1Loss, self).__init__(size_average, reduce, reduction)

    def forward(self, input: Tensor, target: Tensor) -> Tensor:
        return F.l1_loss(input, target, reduction=self.reduction)
```
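The same Module-plus-forward pattern can be reproduced in user code. The sketch below is not part of PyTorch: `CountingL1Loss` is a hypothetical class that copies the structure of `nn.L1Loss` and adds a call counter, only to make it visible that calling the instance runs `forward()`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.nn.modules.loss import _Loss


class CountingL1Loss(nn.Module):  # hypothetical class, for illustration only
    """Same Module -> functional delegation as nn.L1Loss, plus a call counter."""

    def __init__(self, reduction: str = "mean") -> None:
        super().__init__()
        self.reduction = reduction
        self.calls = 0

    def forward(self, input: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
        self.calls += 1  # shows that forward() actually ran
        return F.l1_loss(input, target, reduction=self.reduction)


output = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([1.5, 2.0, 1.0])

loss_func = CountingL1Loss()
loss = loss_func(output, target)   # nn.Module.__call__ dispatches to forward()

# The inheritance chain described in the text: L1Loss -> _Loss -> nn.Module
inherits_loss = issubclass(nn.L1Loss, _Loss)
inherits_module = issubclass(_Loss, nn.Module)
print(loss_func.calls, inherits_loss, inherits_module)
```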

The forward function of L1Loss takes two tensors and computes the absolute value of their difference, delegating the actual work to F.l1_loss. Let us step into F.l1_loss:

```python
def l1_loss(
    input: Tensor,
    target: Tensor,
    size_average: Optional[bool] = None,
    reduce: Optional[bool] = None,
    reduction: str = "mean",
) -> Tensor:
    if has_torch_function_variadic(input, target):
        return handle_torch_function(
            l1_loss, (input, target), input, target, size_average=size_average, reduce=reduce, reduction=reduction
        )
    if not (target.size() == input.size()):
        warnings.warn(
            "Using a target size ({}) that is different to the input size ({}). "
            "This will likely lead to incorrect results due to broadcasting. "
            "Please ensure they have the same size.".format(target.size(), input.size()),
            stacklevel=2,
        )
    if size_average is not None or reduce is not None:
        reduction = _Reduction.legacy_get_string(size_average, reduce)

    expanded_input, expanded_target = torch.broadcast_tensors(input, target)
    return torch._C._nn.l1_loss(expanded_input, expanded_target, _Reduction.get_enum(reduction))
```

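F.l1_loss mainly validates its arguments. For example, if the two tensors differ in size it issues a warning, because the torch.broadcast_tensors call that follows will broadcast them to a common shape and the result is very likely not the intended L1 loss. The input and target should therefore have the same size.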
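The effect of a size mismatch can be seen with a minimal example. The shapes below are assumptions picked to trigger broadcasting; whether and how the warning is emitted may differ between PyTorch versions, so the code only records warnings without relying on them.

```python
import warnings

import torch
import torch.nn.functional as F

output = torch.tensor([[1.0], [2.0], [3.0]])   # shape (3, 1)
target = torch.tensor([1.0, 2.0, 3.0])         # shape (3,), same values

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    # (3, 1) and (3,) broadcast to (3, 3): every output is compared with every target
    broadcast_loss = F.l1_loss(output, target)

# With matching shapes, the same values give a loss of zero
matched_loss = F.l1_loss(output.squeeze(1), target)

print(f"warnings recorded: {len(caught)}")
print(f"broadcast loss: {broadcast_loss.item():.4f}, matched loss: {matched_loss.item():.4f}")
```

The broadcast result is the mean of a 3 x 3 matrix of pairwise differences (8/9 here), not the element-wise loss the caller presumably wanted.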

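The numerical computation itself is delegated once more, to torch._C._nn.l1_loss. At this point the call has crossed into PyTorch's C++ extension: the underlying code is written in C++ and cannot be inspected from Python. This shows that PyTorch relies on C++ for most of its numerical work, since such low-level computation is slow in pure Python.

For the underlying C++ code, look at the following files in order: https://github.com/pytorch/pytorch/blob/master/torch/csrc/api/src/nn/modules/loss.cpp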

```cpp
#include <torch/nn/modules/loss.h>

namespace F = torch::nn::functional;

namespace torch {
namespace nn {

L1LossImpl::L1LossImpl(const L1LossOptions& options_) : options(options_) {}

void L1LossImpl::reset() {}

void L1LossImpl::pretty_print(std::ostream& stream) const {
  stream << "torch::nn::L1Loss()";
}

Tensor L1LossImpl::forward(const Tensor& input, const Tensor& target) {
  return F::detail::l1_loss(input, target, options.reduction());
}

// ... (remaining loss modules omitted from this excerpt)

} // namespace nn
} // namespace torch
```

https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/Loss.cpp

```cpp
Tensor& l1_loss_out(const Tensor& input, const Tensor& target, int64_t reduction, Tensor& result) {
  if (reduction != Reduction::None) {
    auto diff = at::sub(input, target);
    auto loss = diff.is_complex() ? diff.abs() : diff.abs_();
    if (reduction == Reduction::Mean) {
      return at::mean_out(result, loss, IntArrayRef{});
    } else {
      return at::sum_out(result, loss, IntArrayRef{});
    }
  } else {
    auto diff = input.is_complex() ? at::sub(input, target) : at::sub_out(result, input, target);
    return at::abs_out(result, diff);
  }
}
```

As the code above shows, the L1Loss formula itself is implemented by these two lines:

```cpp
auto diff = at::sub(input, target);
auto loss = diff.is_complex() ? diff.abs() : diff.abs_();
```
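Those two C++ lines, followed by the mean or sum reduction, can be mirrored directly in Python and checked against `F.l1_loss`. This is a sketch for real-valued tensors only; the random inputs and the names `pred`, `manual`, and `reference` are assumptions for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
pred = torch.randn(5, 3)
target = torch.randn(5, 3)

diff = torch.sub(pred, target)   # mirrors at::sub(input, target)
loss = diff.abs()                # mirrors diff.abs(); element-wise result

# The three reductions, computed by hand
manual = {"none": loss, "mean": loss.mean(), "sum": loss.sum()}

# The same three reductions through the functional API
reference = {mode: F.l1_loss(pred, target, reduction=mode) for mode in manual}

for mode in manual:
    print(mode, torch.allclose(manual[mode], reference[mode]))
```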

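To summarize, the implementation flow of a loss function, as shown in the figure, is:

First, the loss function inherits from Module and implements forward, which is where the computation takes place;

Second, the actual computation is delegated to a function in nn.functional;

Finally, most of PyTorch's numerical computation is implemented in C++; for these losses the code is in ATen/native/Loss.cpp.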

2. CrossEntropyLoss

CLASS torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean', label_smoothing=0.0)

Function: applies the softmax activation function to the input and then computes the cross-entropy loss. Early versions of PyTorch built this from nn.LogSoftmax() and nn.NLLLoss(); it is now provided directly by nn.CrossEntropyLoss(), although the official documentation still notes the equivalence:

V1.11.0: "Note that this case is equivalent to the combination of LogSoftmax and NLLLoss."

V1.6.0: "This criterion combines nn.LogSoftmax() and nn.NLLLoss() in one single class."
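The equivalence quoted from the documentation is easy to check numerically. The logits and targets below are random placeholders chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
logits = torch.randn(4, 5)              # 4 samples, 5 classes (raw scores)
target = torch.tensor([0, 2, 4, 1])     # class indices

# One step: CrossEntropyLoss applied to raw logits
ce = nn.CrossEntropyLoss()(logits, target)

# Two steps: LogSoftmax over the class dimension, then NLLLoss
log_probs = nn.LogSoftmax(dim=1)(logits)
two_step = nn.NLLLoss()(log_probs, target)

print(torch.allclose(ce, two_step))
```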

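Supplement: A Brief Note on Cross-Entropy Loss

Cross-entropy loss is also known as log-likelihood loss or log loss; in binary classification it is also called logistic loss. Its expression is $L = -\sum_i y_i \log(x_i)$. What PyTorch implements here is not cross-entropy in the strict sense: it first passes the input through the softmax activation function, which "normalizes" the vector into probabilities, and only then computes the strict cross-entropy against the target.

Multi-class classification commonly pairs the softmax activation function with the cross-entropy loss. Cross-entropy describes the difference between two probability distributions, but a neural network outputs a plain vector, not a probability distribution. Softmax is therefore needed to "normalize" that vector into a probability distribution before the cross-entropy loss is computed.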
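The two steps, softmax normalization followed by $L = -\sum_i y_i \log(x_i)$, can be written out by hand and compared with PyTorch's result. The logits below are made-up values, and the one-hot vector is built here only to match the formula.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[2.0, 1.0, 0.1]])   # raw network output for one sample
target = torch.tensor([0])                 # the true class index

# Step 1: softmax turns the vector into a probability distribution
probs = torch.softmax(logits, dim=1)

# Step 2: strict cross-entropy against the one-hot label y
y = F.one_hot(target, num_classes=3).float()
manual = -(y * probs.log()).sum(dim=1).mean()

builtin = F.cross_entropy(logits, target)

print(probs.sum().item(), manual.item(), builtin.item())
```

Because `y` is one-hot, only the log-probability of the true class survives the sum, which is why the built-in version can take a class index directly.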

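Parameters:

weight (Tensor, optional) – Per-class weights that adjust how much each class contributes to the loss; commonly used when the classes are imbalanced. If given, it has to be a Tensor of size C.

ignore_index (int, optional) – A target value to ignore; samples with this target are excluded from the loss computation.

size_average (bool, optional) – Deprecated. Its role has been taken over by reduction; it is kept only so that code written for older versions still runs.

reduce (bool, optional) – Deprecated. Its role has been taken over by reduction; it is kept only so that code written for older versions still runs.

reduction (string, optional) – Whether to "reduce" the loss: take the mean of the loss values (mean), sum them (sum), or keep the original shape (none). Almost every loss function in PyTorch uses this parameter, so it is worth understanding well.

label_smoothing (float, optional) – The label-smoothing parameter, a technique for reducing variance and preventing overfitting. For details, see the paper "Rethinking the Inception Architecture for Computer Vision". It is usually set between 0.01 and 0.1, although the valid range is a float in [0.0, 1.0].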
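The effect of `ignore_index`, `weight`, and `label_smoothing` can each be reproduced by hand. This is a sketch assuming PyTorch 1.10 or later (where `label_smoothing` exists); the logits, class weights, and `eps = 0.1` are arbitrary illustrative values.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(6, 3)                      # 6 samples, C = 3 classes
target = torch.tensor([0, 1, 2, 1, -100, 0])    # -100 is the default ignore_index
keep = target != -100

# ignore_index: the ignored sample contributes 0 and is left out of the mean
per_sample = nn.CrossEntropyLoss(reduction="none")(logits, target)
mean_loss = nn.CrossEntropyLoss()(logits, target)

# weight: a weighted average over the kept samples
class_weight = torch.tensor([1.0, 2.0, 0.5])
weighted = nn.CrossEntropyLoss(weight=class_weight)(logits, target)
w = class_weight[target[keep]]
manual_weighted = (w * per_sample[keep]).sum() / w.sum()

# label_smoothing: mix the usual loss with a uniform-target loss
eps = 0.1
x, t = logits[keep], target[keep]
smoothed = nn.CrossEntropyLoss(label_smoothing=eps)(x, t)
log_p = F.log_softmax(x, dim=1)
manual_smoothed = (1 - eps) * F.nll_loss(log_p, t) + eps * (-log_p.mean(dim=1)).mean()

print(per_sample[4].item(), mean_loss.item(), weighted.item(), smoothed.item())
```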

The underlying C++ implementation: https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/LossNLL.cpp

```cpp
Tensor cross_entropy_loss(
    const Tensor& self,
    const Tensor& target,
    const c10::optional<Tensor>& weight,
    int64_t reduction,
    int64_t ignore_index,
    double label_smoothing) {
  Tensor ret;
  if (self.sizes() == target.sizes()) {
    // Assume soft targets when input and target shapes are the same
    TORCH_CHECK(at::isFloatingType(target.scalar_type()),
        "Expected floating point type for target with class probabilities, got ", target.scalar_type());
    TORCH_CHECK(ignore_index < 0, "ignore_index is not supported for floating point target");

    // See [Note: hacky wrapper removal for optional tensor]
    c10::MaybeOwned<Tensor> weight_maybe_owned = at::borrow_from_optional_tensor(weight);
    const Tensor& weight_ = *weight_maybe_owned;
    ret = cross_entropy_loss_prob_target(self, target, weight_, reduction, label_smoothing);
  } else if (label_smoothing > 0.0) {
    TORCH_CHECK(label_smoothing <= 1.0, "label_smoothing must be between 0.0 and 1.0. Got: ", label_smoothing);

    // See [Note: hacky wrapper removal for optional tensor]
    c10::MaybeOwned<Tensor> weight_maybe_owned = at::borrow_from_optional_tensor(weight);
    const Tensor& weight_ = *weight_maybe_owned;
    ret = cross_entropy_loss_label_smoothing(self, target, weight_, reduction, ignore_index, label_smoothing);
  } else {
    auto class_dim = self.dim() == 1 ? 0 : 1;
    ret = at::nll_loss_nd(
        at::log_softmax(self, class_dim, self.scalar_type()),
        target,
        weight,
        reduction,
        ignore_index);
  }
  return ret;
}

Tensor & nll_loss_out(const Tensor & self, const Tensor & target, const c10::optional<Tensor>& weight_opt, int64_t reduction, int64_t ignore_index, Tensor & output) {
  // See [Note: hacky wrapper removal for optional tensor]
  c10::MaybeOwned<Tensor> weight_maybe_owned = at::borrow_from_optional_tensor(weight_opt);
  const Tensor& weight = *weight_maybe_owned;

  Tensor total_weight = at::empty({0}, self.options());
  return std::get<0>(at::nll_loss_forward_out(output, total_weight, self, target, weight, reduction, ignore_index));
}

Tensor nll_loss(const Tensor & self, const Tensor & target, const c10::optional<Tensor>& weight_opt, int64_t reduction, int64_t ignore_index) {
  // See [Note: hacky wrapper removal for optional tensor]
  c10::MaybeOwned<Tensor> weight_maybe_owned = at::borrow_from_optional_tensor(weight_opt);
  const Tensor& weight = *weight_maybe_owned;

  return std::get<0>(at::nll_loss_forward(self, target, weight, reduction, ignore_index));
}

Tensor nll_loss_nd(
    const Tensor& self,
    const Tensor& target,
    const c10::optional<Tensor>& weight,
    int64_t reduction,
    int64_t ignore_index) {
  if (self.dim() < 1) {
    TORCH_CHECK_VALUE(
        false, "Expected 1 or more dimensions (got ", self.dim(), ")");
  }

  if (self.dim() != 1 && self.sizes()[0] != target.sizes()[0]) {
    TORCH_CHECK_VALUE(
        false,
        "Expected input batch_size (",
        self.sizes()[0],
        ") to match target batch_size (",
        target.sizes()[0],
        ").");
  }

  Tensor ret;
  Tensor input_ = self;
  Tensor target_ = target;
  if (input_.dim() == 1 || input_.dim() == 2) {
    ret = at::nll_loss(input_, target_, weight, reduction, ignore_index);
  } else if (input_.dim() == 4) {
    ret = at::nll_loss2d(input_, target_, weight, reduction, ignore_index);
  } else {
    // dim == 3 or dim > 4
    auto n = input_.sizes()[0];
    auto c = input_.sizes()[1];
    auto out_size = input_.sizes().slice(2).vec();
    out_size.insert(out_size.begin(), n);
    if (target_.sizes().slice(1) != input_.sizes().slice(2)) {
      TORCH_CHECK(
          false,
          "Expected target size ",
          IntArrayRef(out_size),
          ", got ",
          target_.sizes());
    }
    input_ = input_.contiguous();
    target_ = target_.contiguous();
    // support empty batches, see #15870
    if (input_.numel() > 0) {
      input_ = input_.view({n, c, 1, -1});
    } else {
      input_ = input_.view({n, c, 0, 0});
    }
    if (target_.numel() > 0) {
      target_ = target_.view({n, 1, -1});
    } else {
      target_ = target_.view({n, 0, 0});
    }
    if (reduction != Reduction::None) {
      ret = at::nll_loss2d(input_, target_, weight, reduction, ignore_index);
    } else {
      auto out =
          at::nll_loss2d(input_, target_, weight, reduction, ignore_index);
      ret = out.view(out_size);
    }
  }
  return ret;
}
```
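Two of the branches in the C++ code above can be observed from Python. This is a sketch assuming PyTorch 1.10 or later, the first version in which a floating-point target of the same shape as the input is treated as class probabilities; the shapes and random values are illustrative.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
N, C, L = 2, 3, 4

# Class-index targets with a 3-D input (N, C, L): handled by the
# "dim == 3 or dim > 4" path of nll_loss_nd, which reshapes and calls nll_loss2d.
seq_logits = torch.randn(N, C, L)
seq_target = torch.randint(0, C, (N, L))
loss_3d = F.cross_entropy(seq_logits, seq_target)

# The same loss after flattening every position into its own sample
flat_logits = seq_logits.permute(0, 2, 1).reshape(-1, C)
loss_flat = F.cross_entropy(flat_logits, seq_target.reshape(-1))

# With reduction="none" the output is reshaped back to (N, L)
per_position = F.cross_entropy(seq_logits, seq_target, reduction="none")

# Soft targets: a float target with the same shape as the input takes the first branch.
logits = torch.randn(5, C)
hard = torch.randint(0, C, (5,))
soft = F.one_hot(hard, num_classes=C).float()   # one-hot rows as class probabilities
loss_soft = F.cross_entropy(logits, soft)
loss_hard = F.cross_entropy(logits, hard)

print(loss_3d.item(), loss_flat.item(), tuple(per_position.shape))
print(loss_soft.item(), loss_hard.item())
```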

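Example: code

Notes on using CrossEntropyLoss:

- When the target holds class indices, it must be an integer tensor (torch.long); a one-hot vector is not required;

- Class indices start from 0: for a 10-class task, the class indices should be 0, 1, 2, 3, 4, 5, 6, 7, 8, 9.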
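Both notes can be illustrated with a short sketch. It assumes, purely for illustration, that the labels arrive as one-hot rows and need converting to class indices; the label values and the random logits are made up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
num_classes = 10
logits = torch.randn(4, num_classes)             # model output: (batch, classes)

# Suppose the labels are stored one-hot (rows of an identity matrix)
one_hot_labels = torch.eye(num_classes)[[3, 0, 9, 5]]

# Convert to class indices: shape (batch,), dtype torch.int64, values in 0..9
target = one_hot_labels.argmax(dim=1)

loss = nn.CrossEntropyLoss()(logits, target)

print(target.tolist(), target.dtype)
print(loss.item())
```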

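Summary

This section dissected two loss functions in order to learn how PyTorch implements losses. Study the relationship diagram below carefully; it will help a great deal when you later write all kinds of custom loss functions.

Deep learning has many more loss functions than can be listed here. If you are interested, see:

https://github.com/JunMa11/SegLoss

as well as IoU, GIoU, DIoU, and CIoU in object detection.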
